Linux namespace in Go - Part 3, Cgroups resource limit

In the previous article, I ran two experiments on the isolation provided by the UID and Mount namespaces. This article explains how to limit a container’s resources, such as CPU and memory, by using cgroups.

The series of Linux namespace in Go:

- Linux namespace in Go - Part 1, UTS and PID

- Linux namespace in Go - Part 2, UID and Mount

- Linux namespace in Go - Part 3, Cgroups resource limit

Cgroups

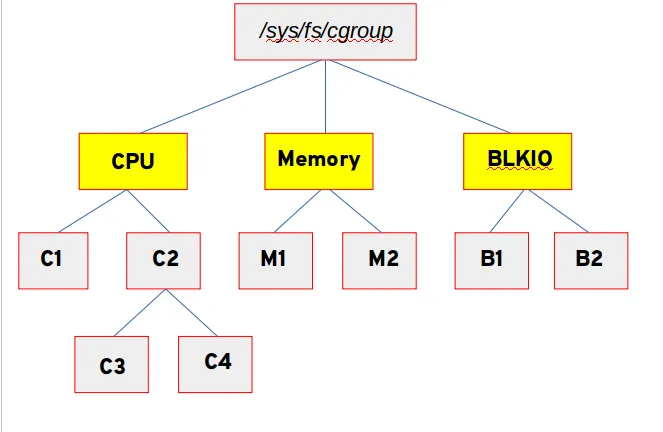

Control groups, usually referred to as cgroups, are a Linux kernel feature that allows processes to be organized into hierarchical groups whose usage of various types of resources can then be limited and monitored. The kernel’s cgroup interface is provided through a pseudo-filesystem called cgroupfs. Grouping is implemented in the core cgroup kernel code, while resource tracking and limits are implemented in a set of per-resource-type subsystems (memory, CPU, and so on).

We can use cgroups to control a container’s resource usage. This is necessary when many containers run on one host machine: it prevents a single container from consuming so many resources that the other containers run out of CPU, memory, and so on. Resource limits are configured through ordinary file I/O: you write to the cgroup configuration files and the change takes effect immediately.

How to set up a cgroup limit?

Cgroup configuration is organized as a file system hierarchy. By convention, cgroupfs is mounted under /sys/fs/cgroup, and each resource has its own configuration directories under paths such as /sys/fs/cgroup/cpu/user/user1, which holds the CPU configuration for user1’s processes.

Cgroup configuration applies to processes. If a parent process is limited, its child processes are automatically limited by the parent’s cgroup as well. The list of processes in a cgroup is stored in its cgroup.procs file, for example /sys/fs/cgroup/cpu/user/user1/cgroup.procs. After you add a process ID to the file, the processes it spawns are added to the file automatically.
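As a rough illustration of the cgroup.procs format described above (one PID per line), here is a minimal Go sketch that parses such content. The function name `parseProcs` and the sample PIDs are made up for this example; on a real host you would read the content from a cgroup.procs file with `os.ReadFile`.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// parseProcs parses the content of a cgroup.procs file, which lists
// one process ID per line. (Hypothetical helper, not from the article.)
func parseProcs(content string) ([]int, error) {
	var pids []int
	sc := bufio.NewScanner(strings.NewReader(content))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" {
			continue // skip blank lines
		}
		pid, err := strconv.Atoi(line)
		if err != nil {
			return nil, fmt.Errorf("invalid PID %q: %w", line, err)
		}
		pids = append(pids, pid)
	}
	return pids, sc.Err()
}

func main() {
	// Sample content with made-up PIDs, standing in for a real cgroup.procs.
	pids, err := parseProcs("1234\n1240\n")
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Println("processes in cgroup:", pids)
}
```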

Resource Types

You can limit different types of resources for your processes. Each resource type is managed by a controller.

cpu

You can set both soft and hard limits on the CPU time your processes can use. A soft limit lets the process use more than its share when the CPU is not busy, but not otherwise. A hard limit means the process cannot use more than the specified limit, whether the CPU is busy or not.

cpuacct

The CPU accounting controller is used to group tasks using cgroups and account the CPU usage of these groups of tasks. The CPU accounting controller supports multi-hierarchy groups. An accounting group accumulates the CPU usage of all of its child groups and the tasks directly present in its group.

cpuset

This cgroup can be used to bind the processes in a cgroup to a specified set of CPUs and NUMA nodes.

memory

The memory controller supports reporting and limiting of process memory, kernel memory, and swap used by cgroups.

devices

This supports controlling which processes may create (mknod) devices as well as open them for reading or writing. The policies may be specified as allow-lists and deny-lists. Hierarchy is enforced, so new rules must not violate existing rules for the target or ancestor cgroups.

freezer

The freezer cgroup can suspend and restore (resume) all processes in a cgroup. Freezing a cgroup /A also causes its children, for example, processes in /A/B, to be frozen.

net_cls

This places a classid, specified for the cgroup, on network packets created by a cgroup. These classids can then be used in firewall rules, as well as used to shape traffic using tc(8). This applies only to packets leaving the cgroup, not to traffic arriving at the cgroup.

blkio

The blkio cgroup controls and limits access to specified block devices by applying IO control in the form of throttling and upper limits against leaf nodes and intermediate nodes in the storage hierarchy.

perf_event

This controller allows perf monitoring of the set of processes grouped in a cgroup.

net_prio

This allows priorities to be specified, per network interface, for cgroups.

hugetlb

This supports limiting the use of huge pages by cgroups.

pids

This controller permits limiting the number of processes that may be created in a cgroup.

rdma

The RDMA controller permits limiting the use of RDMA/IB-specific resources per cgroup.

I will use the CPU and memory controllers as examples in the following exercises.

CPU controller

This section shows how to set up CPU limits for a process. In this case, I want to set a hard CPU limit of 0.5 cores.

First, we need to create an isolated group for this CPU limit. As I said before, the configuration usually lives under /sys/fs/cgroup, so let’s create a new folder there and call it /sys/fs/cgroup/cpu/mycontainer.

    sudo mkdir -p /sys/fs/cgroup/cpu/mycontainer

Then we set the hard CPU limit to 0.5 cores. There are two parameters:

- cpu.cfs_quota_us: the total available run-time within a period (in microseconds)

- cpu.cfs_period_us: the length of a period (in microseconds)

The process is scheduled for at most cpu.cfs_quota_us microseconds out of every cpu.cfs_period_us microseconds, so to use only 0.5 cores we can specify a quota of 5000 with a period of 10000.

    sudo su
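The quota arithmetic above (0.5 cores with a period of 10000 gives a quota of 5000) can be sketched in Go as follows. This is only an illustration: the helper names `quotaForCores` and `writeCPULimit` are hypothetical, and `main` writes into a temporary directory so it runs without root. On a real host you would pass /sys/fs/cgroup/cpu/mycontainer and run as root.

```go
package main

import (
	"fmt"
	"math"
	"os"
	"path/filepath"
	"strconv"
)

// quotaForCores converts a CPU limit in cores into a cpu.cfs_quota_us
// value for the given period (both in microseconds).
func quotaForCores(cores float64, periodUs int) int {
	return int(math.Round(cores * float64(periodUs)))
}

// writeCPULimit writes cpu.cfs_period_us and cpu.cfs_quota_us inside cgroupDir.
func writeCPULimit(cgroupDir string, cores float64, periodUs int) error {
	period := filepath.Join(cgroupDir, "cpu.cfs_period_us")
	if err := os.WriteFile(period, []byte(strconv.Itoa(periodUs)), 0o644); err != nil {
		return err
	}
	quota := filepath.Join(cgroupDir, "cpu.cfs_quota_us")
	return os.WriteFile(quota, []byte(strconv.Itoa(quotaForCores(cores, periodUs))), 0o644)
}

func main() {
	fmt.Println("quota for 0.5 cores:", quotaForCores(0.5, 10000))

	// Dry run against a temporary directory (an assumption for this sketch).
	dir, err := os.MkdirTemp("", "cpu-cgroup-demo")
	if err != nil {
		fmt.Println("mkdir temp:", err)
		return
	}
	defer os.RemoveAll(dir)
	if err := writeCPULimit(dir, 0.5, 10000); err != nil {
		fmt.Println("write limit:", err)
	}
}
```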

Finally, we add the Bash process to the cgroup.procs file:

    bash

You can test the limit by running yes > /dev/null and monitoring the CPU usage with htop. The yes command should use around 0.5 cores.

Memory Controller

Similar to the CPU controller, let’s have a look at the memory cgroup configurations.

- tasks # attach a task(thread) and show list of threads

- cgroup.procs # show list of processes

- cgroup.event_control # an interface for event_fd()

- memory.usage_in_bytes # show current usage for memory

- memory.memsw.usage_in_bytes # show current usage for memory+Swap

- memory.limit_in_bytes # set/show limit of memory usage

- memory.memsw.limit_in_bytes # set/show limit of memory+Swap usage

- … For more details, check Memory Cgroup

To set up a hard memory limit for a process, we first create a cgroup folder and write the limit to memory.limit_in_bytes. After that, we add the process ID to cgroup.procs.

    sudo mkdir -p /sys/fs/cgroup/memory/mycontainer
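Writing the memory limit could look roughly like the Go sketch below. The helper names and the 100 MiB value are assumptions chosen for illustration, and the dry run uses a temporary directory instead of /sys/fs/cgroup/memory/mycontainer so that it runs without root.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"strconv"
	"strings"
)

// setMemoryLimit writes limitBytes to memory.limit_in_bytes inside cgroupDir.
func setMemoryLimit(cgroupDir string, limitBytes int64) error {
	path := filepath.Join(cgroupDir, "memory.limit_in_bytes")
	return os.WriteFile(path, []byte(strconv.FormatInt(limitBytes, 10)), 0o644)
}

// readMemoryLimit reads the current value of memory.limit_in_bytes back.
func readMemoryLimit(cgroupDir string) (int64, error) {
	data, err := os.ReadFile(filepath.Join(cgroupDir, "memory.limit_in_bytes"))
	if err != nil {
		return 0, err
	}
	return strconv.ParseInt(strings.TrimSpace(string(data)), 10, 64)
}

// dryRun exercises both helpers against a temporary directory.
func dryRun(limitBytes int64) (int64, error) {
	dir, err := os.MkdirTemp("", "memory-cgroup-demo")
	if err != nil {
		return 0, err
	}
	defer os.RemoveAll(dir)
	if err := setMemoryLimit(dir, limitBytes); err != nil {
		return 0, err
	}
	return readMemoryLimit(dir)
}

func main() {
	// 100 MiB is an arbitrary example value.
	limit, err := dryRun(100 * 1024 * 1024)
	if err != nil {
		fmt.Println("dry run failed:", err)
		return
	}
	fmt.Println("memory.limit_in_bytes =", limit)
}
```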

Cgroups in Golang

Working with cgroups in Go is equivalent to the commands I executed above. You can find the example code in exercise05.

    func addProcessToCgroup(filepath string, pid int) {
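For readers without the exercise05 source at hand, a function with this name might be sketched as below. This is not the article's implementation: the body, the error return value, and the `dryRunAdd` helper are assumptions. On cgroupfs, writing a PID to cgroup.procs moves that process into the cgroup. The dry run writes to a temporary file so it works without root.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"strconv"
	"strings"
)

// addProcessToCgroup writes pid to the cgroup.procs file at procsPath.
// Sketch only: the signature differs from the article's (it returns an error).
func addProcessToCgroup(procsPath string, pid int) error {
	return os.WriteFile(procsPath, []byte(strconv.Itoa(pid)), 0o644)
}

// dryRunAdd writes pid to a stand-in cgroup.procs file in a temporary
// directory and returns what was written.
func dryRunAdd(pid int) (string, error) {
	dir, err := os.MkdirTemp("", "cgroup-procs-demo")
	if err != nil {
		return "", err
	}
	defer os.RemoveAll(dir)
	procs := filepath.Join(dir, "cgroup.procs")
	if err := addProcessToCgroup(procs, pid); err != nil {
		return "", err
	}
	data, err := os.ReadFile(procs)
	return strings.TrimSpace(string(data)), err
}

func main() {
	// On a real host, use /sys/fs/cgroup/cpu/mycontainer/cgroup.procs as root.
	written, err := dryRunAdd(os.Getpid())
	if err != nil {
		fmt.Println("dry run failed:", err)
		return
	}
	fmt.Println("wrote PID", written)
}
```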

Updating the cgroup directories requires root permission. We not only need to run the program with sudo, but also need to modify the SysProcIDMap: use the command-line arguments to map the root user and group in the container to a host UID and GID. In my case that is my current non-root user, so I use -uid=1000 -gid=1000.

    sudo NEWROOT=/home/srjiang/Downloads/alpine_root go run exercise05/main.go -uid=1000 -gid=1000
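The UID and GID mapping mentioned above uses `syscall.SysProcIDMap` from the Go standard library (Linux only). The sketch below shows roughly how such a mapping is attached to a command. The function name `newContainerCmd`, the `/bin/sh` command, and the single `CLONE_NEWUSER` flag are assumptions for illustration, and the command is only constructed, not started.

```go
package main

import (
	"fmt"
	"os"
	"os/exec"
	"syscall"
)

// newContainerCmd builds a command whose root user and group (ID 0 inside
// the new user namespace) map to the given host uid and gid.
// Hypothetical helper; the real exercise05 code may differ.
func newContainerCmd(uid, gid int) *exec.Cmd {
	cmd := exec.Command("/bin/sh")
	cmd.Stdin, cmd.Stdout, cmd.Stderr = os.Stdin, os.Stdout, os.Stderr
	cmd.SysProcAttr = &syscall.SysProcAttr{
		Cloneflags: syscall.CLONE_NEWUSER,
		UidMappings: []syscall.SysProcIDMap{
			{ContainerID: 0, HostID: uid, Size: 1},
		},
		GidMappings: []syscall.SysProcIDMap{
			{ContainerID: 0, HostID: gid, Size: 1},
		},
	}
	return cmd
}

func main() {
	// 1000 matches the -uid=1000 -gid=1000 arguments used in the article.
	cmd := newContainerCmd(1000, 1000)
	// The command is not started here; we only inspect the mappings.
	fmt.Printf("uid mappings: %+v\n", cmd.SysProcAttr.UidMappings)
	fmt.Printf("gid mappings: %+v\n", cmd.SysProcAttr.GidMappings)
}
```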

After you run the program, you’ll find its process ID in /sys/fs/cgroup/cpu/mycontainer/cgroup.procs. From there, you can adjust the cgroup configuration, such as the CPU and memory limits, on your own.

What’s Next?

We now have a container with its own file system, an isolated user namespace, and a PID namespace, and we can limit its resources. Next, we want to bring networking to this container.