How to set up a reasonable memory limit for Java applications in Kubernetes

This article shares some findings about Java memory usage in Kubernetes and explains how to set a reasonable memory request/limit based on the Java heap requirement and the application’s overall memory usage.

Context

What prompted me to look into the memory usage of Java applications in Kubernetes was an increase in OOM events in production. After investigating, I found they were not caused by a JVM heap shortage, so I needed to find out where the non-heap memory was going.

(If you’re not familiar with OOM events, you can check the article “How to alert for Pod Restart & OOMKilled in Kubernetes”.)

Container Metrics

There are several metrics for memory usage in Kubernetes:

container_memory_rss (cAdvisor)

The amount of anonymous and swap cache memory (including transparent hugepages).

container_memory_working_set_bytes (cAdvisor)

The amount of working set memory, which includes recently accessed memory, dirty memory, and kernel memory. Working set is <= “usage” and equals usage minus total_inactive_file.

resident set size

It is container_memory_rss + file_mapped (file_mapped is accounted only when the memory cgroup is the owner of the page cache).
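The working-set definition above is plain arithmetic. Here is a minimal Java sketch of it, assuming cgroup v1, where memory.stat contains a total_inactive_file line and the usage value comes from memory.usage_in_bytes. The sample values are made up, and clamping the result at zero is my assumption about how the edge case is handled.

```java
import java.util.HashMap;
import java.util.Map;

final class WorkingSet {
    // Parses "key value" lines, the format used by cgroup v1 memory.stat.
    static Map<String, Long> parseMemoryStat(String text) {
        Map<String, Long> stats = new HashMap<>();
        for (String line : text.split("\n")) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length == 2) {
                stats.put(parts[0], Long.parseLong(parts[1]));
            }
        }
        return stats;
    }

    // working set = usage - total_inactive_file, clamped at zero (assumption).
    static long workingSetBytes(long usageBytes, long totalInactiveFileBytes) {
        return Math.max(0L, usageBytes - totalInactiveFileBytes);
    }

    public static void main(String[] args) {
        // Hypothetical values; inside a container they would be read from
        // memory.usage_in_bytes and memory.stat.
        long usage = 2_000_000_000L;
        String memoryStat = "total_rss 1500000000\ntotal_inactive_file 200000000\n";
        long inactive = parseMemoryStat(memoryStat).getOrDefault("total_inactive_file", 0L);
        System.out.println(workingSetBytes(usage, inactive)); // prints 1800000000
    }
}
```

The point of the subtraction is that inactive page cache can be reclaimed by the kernel, so it is not counted against the container.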

Kubernetes relies on container_memory_working_set_bytes when OOM-killing a container that exceeds its memory limit, so we’ll use this metric in the following sections.

Heap Usage << Memory Limit

After we noticed several OOMs in production, it was time to figure out the root cause. According to the JVM metrics, the heap size was far smaller than the memory usage reported by Kubernetes. Let’s look at a sample whose memory usage peaked at around 90% of the memory limit.

The initial and max heap sizes are both 1.5G, set by -XX:InitialHeapSize=1536m -XX:MaxHeapSize=1536m -XX:MaxGCPauseMillis=50.

The Kubernetes memory request and limit are both 3G, set in deployment.yaml:

```yaml
resources:
  requests:
    memory: 3G
  limits:
    memory: 3G
```

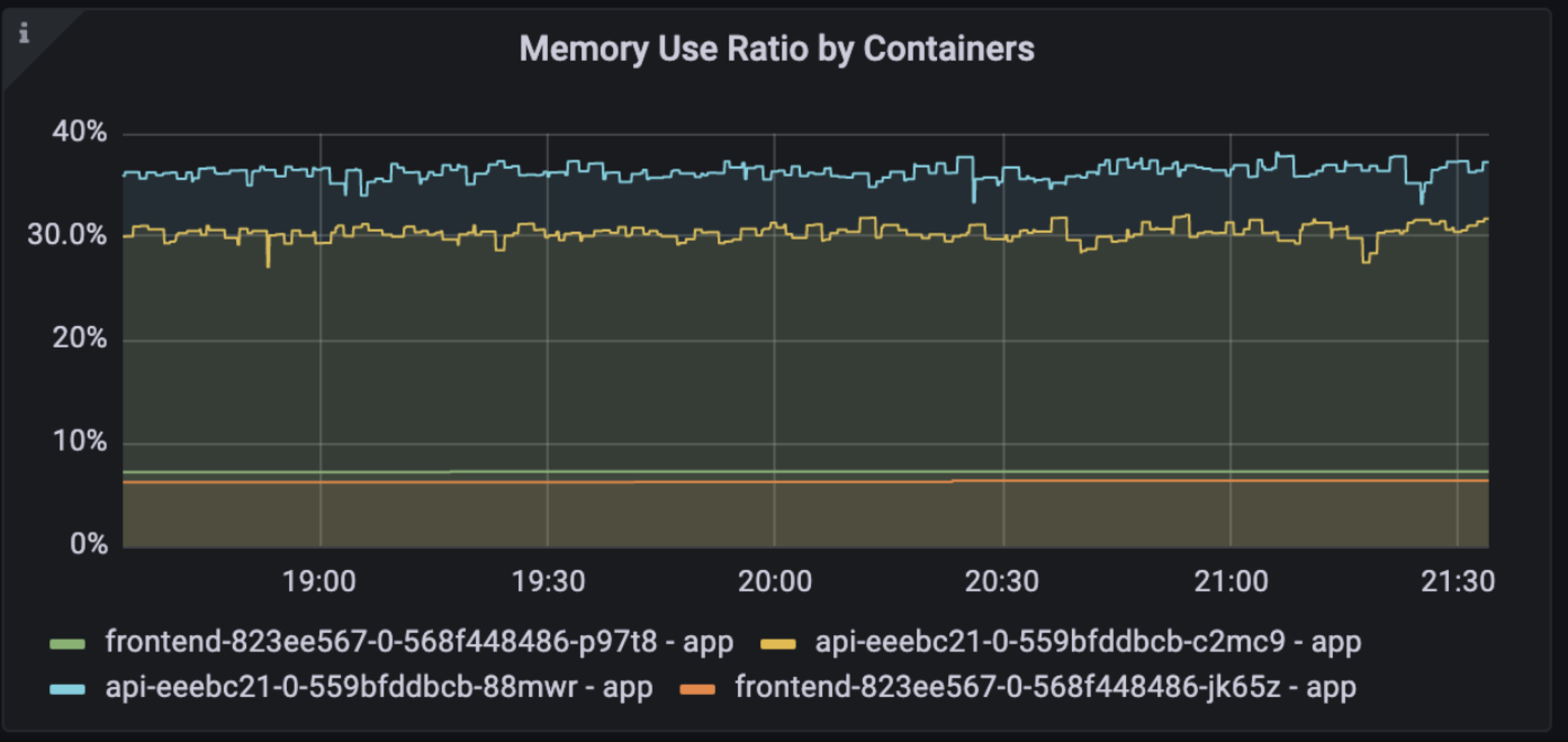

On the monitoring dashboard, the Kubernetes memory usage is close to 90% of the memory limit, which is 2.7G.

In other words, the non-heap memory took 2.7G-1.5G = 1.2G.
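The same estimate as a tiny Java helper, under the assumptions that the heap is fully committed (InitialHeapSize equals MaxHeapSize, as in this sample) and that 1G means 1024 MiB. The helper name nonHeapMiB is hypothetical. With binary units and integer division the result is 1228 MiB, which the text rounds to 1.2G.

```java
final class NonHeapEstimate {
    // Estimates non-heap memory as (observed container usage) - (max heap), in MiB.
    // usagePercent is the reading from the monitoring dashboard.
    static long nonHeapMiB(long limitMiB, int usagePercent, long maxHeapMiB) {
        long usageMiB = limitMiB * usagePercent / 100; // integer division truncates
        return usageMiB - maxHeapMiB;
    }

    public static void main(String[] args) {
        // 3G limit, ~90% usage, 1.5G heap.
        System.out.println(nonHeapMiB(3072, 90, 1536) + " MiB"); // prints 1228 MiB
    }
}
```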

A close-up on Java memory

The JVM uses both heap and non-heap memory. Let’s take a sample from an application started with -XX:InitialHeapSize=1536m -XX:MaxHeapSize=1536m -XX:MaxGCPauseMillis=50.

Heap

Since we set the max heap size, the heap’s upper limit is fixed at 1.5G. With G1 as the GC algorithm, the heap is divided into Eden, Survivor, and Old regions.

We can check the heap info with jcmd. As the output shows, the used heap (593M) is far less than the committed size (1.5G), so the heap usage is fine.

```shell
bash-4.2$ jcmd 99 GC.heap_info
```
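If you would rather read the same heap numbers from inside the application, the standard java.lang.management API exposes used, committed, and max heap. A minimal sketch follows; the class and helper names are hypothetical. As far as I know, MemoryMXBean’s non-heap figure only covers JVM memory pools such as Metaspace and the code cache, not everything that Native Memory Tracking reports, so it would not reveal the GC/Internal growth discussed below.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

final class HeapInfo {
    private static final long MIB = 1024L * 1024L;

    // Committed-but-unused heap, in MiB.
    static long unusedCommittedMiB(MemoryUsage heap) {
        return (heap.getCommitted() - heap.getUsed()) / MIB;
    }

    public static void main(String[] args) {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        // getMax() may return -1 when the max is undefined.
        System.out.printf("heap used=%d MiB committed=%d MiB max=%d MiB%n",
                heap.getUsed() / MIB, heap.getCommitted() / MIB, heap.getMax() / MIB);
        System.out.println("unused committed heap: " + unusedCommittedMiB(heap) + " MiB");
    }
}
```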

To get more debugging information, I enabled Native Memory Tracking (NMT) in the app by adding -XX:NativeMemoryTracking=[off | summary | detail] to the Java options. I chose detail to see more details of the memory usage.
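NMT has to be enabled when the JVM starts, so it can be worth confirming that the flag actually reached the JVM, for example when options are assembled in a container entrypoint. A minimal sketch using RuntimeMXBean.getInputArguments(); the helper nmtMode is hypothetical, and keeping the last occurrence of a repeated flag is an assumption about which one takes effect.

```java
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.Optional;

final class NmtCheck {
    private static final String PREFIX = "-XX:NativeMemoryTracking=";

    // Returns the NMT mode (off/summary/detail) if the flag was passed.
    static Optional<String> nmtMode(List<String> jvmArgs) {
        Optional<String> mode = Optional.empty();
        for (String arg : jvmArgs) {
            if (arg.startsWith(PREFIX)) {
                // If repeated, keep the last occurrence (assumption: later flags win).
                mode = Optional.of(arg.substring(PREFIX.length()));
            }
        }
        return mode;
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("NMT mode: " + nmtMode(jvmArgs).orElse("not set (defaults to off)"));
    }
}
```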

After the application had run for 5 days, its memory usage had slowly increased to 78.29% of the memory limit (3G), namely 2.35G.

Let’s use jcmd to see where the memory went:

```shell
bash-4.2$ jcmd 99 VM.native_memory
```

According to the output, the total committed memory is 2.18G, and the committed heap size is 1.5G, the same as we specified.

For the other sections, Internal took 362M (371646KB), GC took 128M (131736KB), Class took 89M (91739KB), Code took 47M (48115KB), Symbol took 18M (18735KB), Thread took 7M, …

(I didn’t count the Native Memory Tracking section itself, because it is the overhead of the tracking and would not exist in production.)
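To track these numbers over time, the summary output can be scraped. The sketch below assumes the JDK 11-style category line format “- &lt;Category&gt; (reserved=&lt;n&gt;KB, committed=&lt;n&gt;KB)”; other JDK versions may format it differently. The sample reuses the committed values quoted above for Internal and GC, while the reserved values are placeholders.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class NmtSummary {
    // Matches category lines such as "-   Internal (reserved=123KB, committed=123KB)".
    private static final Pattern CATEGORY = Pattern.compile(
            "^-\\s+(.+?)\\s+\\(reserved=(\\d+)KB, committed=(\\d+)KB\\)");

    // Returns committed KB per category, in output order; other lines are skipped.
    static Map<String, Long> committedKb(String nmtOutput) {
        Map<String, Long> result = new LinkedHashMap<>();
        for (String line : nmtOutput.split("\n")) {
            Matcher m = CATEGORY.matcher(line.trim());
            if (m.find()) {
                result.put(m.group(1), Long.parseLong(m.group(3)));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical excerpt: committed values from the text, reserved values are placeholders.
        String sample = String.join("\n",
                "-                  Internal (reserved=371646KB, committed=371646KB)",
                "-                        GC (reserved=131736KB, committed=131736KB)");
        committedKb(sample).forEach((name, kb) ->
                System.out.printf("%-10s %6d MiB%n", name, kb / 1024));
    }
}
```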

Since I had set an NMT baseline early on (with jcmd <process-id> VM.native_memory baseline), we can run jcmd <process-id> VM.native_memory detail.diff to find out which methods consumed the memory.

In the output below (full output), I omitted sections that didn’t grow much. Compared to the baseline, the total committed memory increased by 437M (448201KB), and the Internal section increased the most, by 361M (373425KB).

```
Native Memory Tracking:
```

The output shows that the memory in Internal was allocated by TraceMemoryManagerStats::~TraceMemoryManagerStats(), which is related to GC. So it seems GC creates some data whose size slowly increases over time. Together, GC and Internal consumed 493M, so now we know where the non-heap memory goes.
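If no baseline was set, a rough substitute for the diff is to compare two summary snapshots taken some time apart. A minimal sketch, assuming both snapshots are already parsed into category-to-committed-KB maps; the helper growthKb is hypothetical, the “before” values are invented for illustration, and the “after” values reuse the committed figures from the summary above.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class NmtGrowth {
    // Committed-KB growth per category between two snapshots, largest first.
    // Categories present only in "before" are ignored.
    static List<Map.Entry<String, Long>> growthKb(Map<String, Long> before, Map<String, Long> after) {
        Map<String, Long> delta = new LinkedHashMap<>();
        for (Map.Entry<String, Long> e : after.entrySet()) {
            delta.put(e.getKey(), e.getValue() - before.getOrDefault(e.getKey(), 0L));
        }
        List<Map.Entry<String, Long>> sorted = new ArrayList<>(delta.entrySet());
        sorted.sort(Map.Entry.<String, Long>comparingByValue().reversed());
        return sorted;
    }

    public static void main(String[] args) {
        // Hypothetical "before" snapshot in KB; "after" uses the committed values from the text.
        Map<String, Long> before = Map.of("Internal", 1_000L, "GC", 100_000L, "Class", 90_000L);
        Map<String, Long> after = Map.of("Internal", 371_646L, "GC", 131_736L, "Class", 91_739L);
        for (Map.Entry<String, Long> e : growthKb(before, after)) {
            System.out.printf("%-10s +%d KB%n", e.getKey(), e.getValue());
        }
    }
}
```

Unlike detail.diff, this only shows which category grew, not which call site allocated the memory.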

G1 Tuning?

Java 11 uses G1 as the default GC algorithm, CMS (Concurrent Mark Sweep) is deprecated, and the Java 11 documentation says:

The general recommendation is to use G1 with its default settings, eventually giving it a different pause-time goal and setting a maximum Java heap size by using -Xmx if desired.

In other words, with G1 a complicated configuration is usually unnecessary: you state a goal and G1 tries its best to meet it. This may also partly explain why G1 consumes more and more memory: it might gather information about memory behavior in order to optimize memory allocation.

To fit a Java application into Kubernetes, we need to specify a few things:

InitialHeapSize and MaxHeapSize

These flags limit the heap memory, and setting them to the same value avoids heap resizing. You can set MaxHeapSize either to a static value based on the observed usage, or to a ratio of the memory limit such as 50% or 60%, depending on your real usage.

MaxGCPauseMillis

The default value is 200 (milliseconds). G1 tries to balance throughput and pause time based on this value. There are two directions in GC tuning:

“Increase the throughput” means reducing the overall GC time.

“Improve the GC pause time” means doing GC more frequently; for example, G1 reduces the size of the Young region (Eden and Survivor regions) to trigger young GCs more often.

These two goals conflict. In most cases, we set a pause-time goal and let G1 configure itself based on it.
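Sizing the heap as a ratio of the memory limit, as described under InitialHeapSize and MaxHeapSize, is simple arithmetic. A hypothetical helper that turns a limit and a percentage into fixed-size heap flags is sketched below. As an alternative, the JVM can size the heap relative to the container limit it detects by itself, via -XX:InitialRAMPercentage and -XX:MaxRAMPercentage (JDK 10+, also backported to 8u191).

```java
final class HeapFlags {
    // Heap size in MiB as a percentage of the container memory limit.
    static long heapMiB(long memoryLimitMiB, int heapPercent) {
        if (heapPercent <= 0 || heapPercent >= 100) {
            throw new IllegalArgumentException("heapPercent must be in (0, 100)");
        }
        return memoryLimitMiB * heapPercent / 100;
    }

    // Fixed-size heap: initial == max, so the heap is not resized.
    static String jvmFlags(long memoryLimitMiB, int heapPercent) {
        long heap = heapMiB(memoryLimitMiB, heapPercent);
        return "-XX:InitialHeapSize=" + heap + "m -XX:MaxHeapSize=" + heap + "m";
    }

    public static void main(String[] args) {
        // A 3G limit with a 50% heap gives the 1.5G heap used in this article.
        System.out.println(jvmFlags(3072, 50));
        // prints -XX:InitialHeapSize=1536m -XX:MaxHeapSize=1536m
    }
}
```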

Calculate the required memory based on monitoring

As the memory analysis showed, the required memory = the max heap size + JVM non-heap (GC, Metaspace, Code, etc.). On top of that, the application might need more native memory when it uses APIs backed by native code (JNI); for example, java.util.zip.Inflater and Deflater allocate native memory for decompression and compression.

It’s hard to set an exact memory limit at first, so we can start with a loose limit and leave more room for the non-heap memory. After the application has run in production for some time and the metrics are in place, we can adjust the memory limit based on the monitoring data.
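The formula above can give a starting point for the limit. A minimal sketch, assuming MiB units and reusing the NMT committed sizes quoted earlier (which omit the smaller sections, so the sum underestimates slightly). Targeting 85% utilization mirrors the “Memory Limit is too low” threshold later in this article and is my choice, not a rule. The helper names are hypothetical.

```java
import java.util.Map;

final class MemoryBudget {
    // required = max heap + sum of non-heap components (all in MiB).
    static long requiredMiB(long maxHeapMiB, Map<String, Long> nonHeapMiB) {
        long total = maxHeapMiB;
        for (long v : nonHeapMiB.values()) {
            total += v;
        }
        return total;
    }

    // Smallest limit that keeps required/limit at or below targetPercent (ceiling division).
    static long limitMiB(long requiredMiB, int targetPercent) {
        return (requiredMiB * 100 + targetPercent - 1) / targetPercent;
    }

    public static void main(String[] args) {
        // Committed sizes from the NMT summary (MiB); add JNI/native usage if any.
        Map<String, Long> nonHeap = Map.of(
                "Internal", 362L, "GC", 128L, "Class", 89L,
                "Code", 47L, "Symbol", 18L, "Thread", 7L);
        long required = requiredMiB(1536, nonHeap);
        System.out.println("required MiB: " + required);                   // prints required MiB: 2187
        System.out.println("limit MiB at 85%: " + limitMiB(required, 85)); // prints limit MiB at 85%: 2573
    }
}
```

Treat the result as a floor rather than a final answer: in this article the container’s usage (2.35G) was higher than the NMT committed total (2.18G), so I would compare it against the monitoring data before lowering any limit.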

To help developers tell whether the memory limit is reasonable, we can set some thresholds on the application’s resource usage. If the app falls into one of these holes, we generate a warning for the developers.

Memory Request is too high: mem_usage(p95) / mem_request <= 0.6

Memory Request is too low: mem_usage(p95) / mem_request >= 0.9

Memory Limit is too low: mem_usage(p95) / mem_limit >= 0.85
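The three thresholds can be checked mechanically. A minimal sketch; the method name check and the warning strings are illustrative, and all three inputs are assumed to be in the same unit (MiB here).

```java
import java.util.ArrayList;
import java.util.List;

final class MemoryThresholds {
    // Returns the warnings for one container, given its p95 usage, request, and limit.
    static List<String> check(double p95Usage, double request, double limit) {
        List<String> warnings = new ArrayList<>();
        double ofRequest = p95Usage / request;
        double ofLimit = p95Usage / limit;
        if (ofRequest <= 0.6) warnings.add("Memory Request is too high");
        if (ofRequest >= 0.9) warnings.add("Memory Request is too low");
        if (ofLimit >= 0.85) warnings.add("Memory Limit is too low");
        return warnings;
    }

    public static void main(String[] args) {
        // ~90% of a 3G (3072 MiB) request and limit, as in the dashboard example.
        System.out.println(check(2764, 3072, 3072)); // prints [Memory Limit is too low]
    }
}
```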

The Prometheus query we use computes the P95 memory usage over the last 7 days:

```promql
quantile_over_time(0.95, container_memory_working_set_bytes[7d])
```
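For reference, this is one way to compute a P95 over a window of samples. The sketch uses linear interpolation between the two closest ranks, with rank = q * (n - 1), which to my understanding is what Prometheus does for quantile_over_time. The sample values are hypothetical working-set readings in MiB.

```java
import java.util.Arrays;

final class P95 {
    // q-quantile with linear interpolation between the two closest ranks,
    // using rank = q * (n - 1) (assumed to match Prometheus' quantile_over_time).
    static double quantile(double q, double[] samples) {
        if (q < 0 || q > 1) {
            throw new IllegalArgumentException("q must be in [0, 1]");
        }
        if (samples.length == 0) {
            return Double.NaN;
        }
        double[] sorted = samples.clone();
        Arrays.sort(sorted);
        double rank = q * (sorted.length - 1);
        int lower = (int) Math.floor(rank);
        int upper = Math.min(lower + 1, sorted.length - 1);
        double weight = rank - lower;
        return sorted[lower] * (1 - weight) + sorted[upper] * weight;
    }

    public static void main(String[] args) {
        // Hypothetical working-set samples in MiB.
        double[] samples = {2300, 2200, 2400, 2250, 2350};
        System.out.printf("p95 = %.1f MiB%n", quantile(0.95, samples)); // prints p95 = 2390.0 MiB
    }
}
```

Using P95 rather than the maximum keeps a single spike from dominating the recommendation, while still reflecting sustained high usage.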

You can put these metrics on the monitoring dashboard, send warning-level alerts to the responsible team, and iterate on the memory limit accordingly. Once you have more data, I think this could also be automated.

Conclusion

For Java applications, we recommend setting the max heap to either a static value or a reasonable ratio (40% ~ 60%) of the memory limit based on the heap usage, and making sure to leave enough room for GC and other native memory usage.

For other applications, we can set the required memory based on the monitoring data. We should always leave enough free memory for the application, and the three thresholds above are a good starting point.