Anatomy of Envoy proxy: the architecture of Envoy and how it works

Envoy has become more and more popular. Its basic functionality is quite similar to Nginx: it works as a high-performance web proxy. But Envoy also brings in many features related to SOA and microservices, such as service discovery, circuit breaking, rate limiting and so on.

Many developers know the roles Envoy plays and the basic functionality it implements, but don’t know how its architecture is organized or how to read its configuration. For me, Envoy’s architecture and configuration were not easy to understand, since they involve a lot of terminology. But once you know how user traffic flows, the design of Envoy becomes much easier to follow.

Envoy in a Service Mesh

Recently, more and more companies have adopted a service mesh to solve the communication problems among backend services. Serving as the basic building block of a service mesh is a typical use case for Envoy. For example, Istio, one of the service mesh solutions, uses Envoy as the core of its networking.

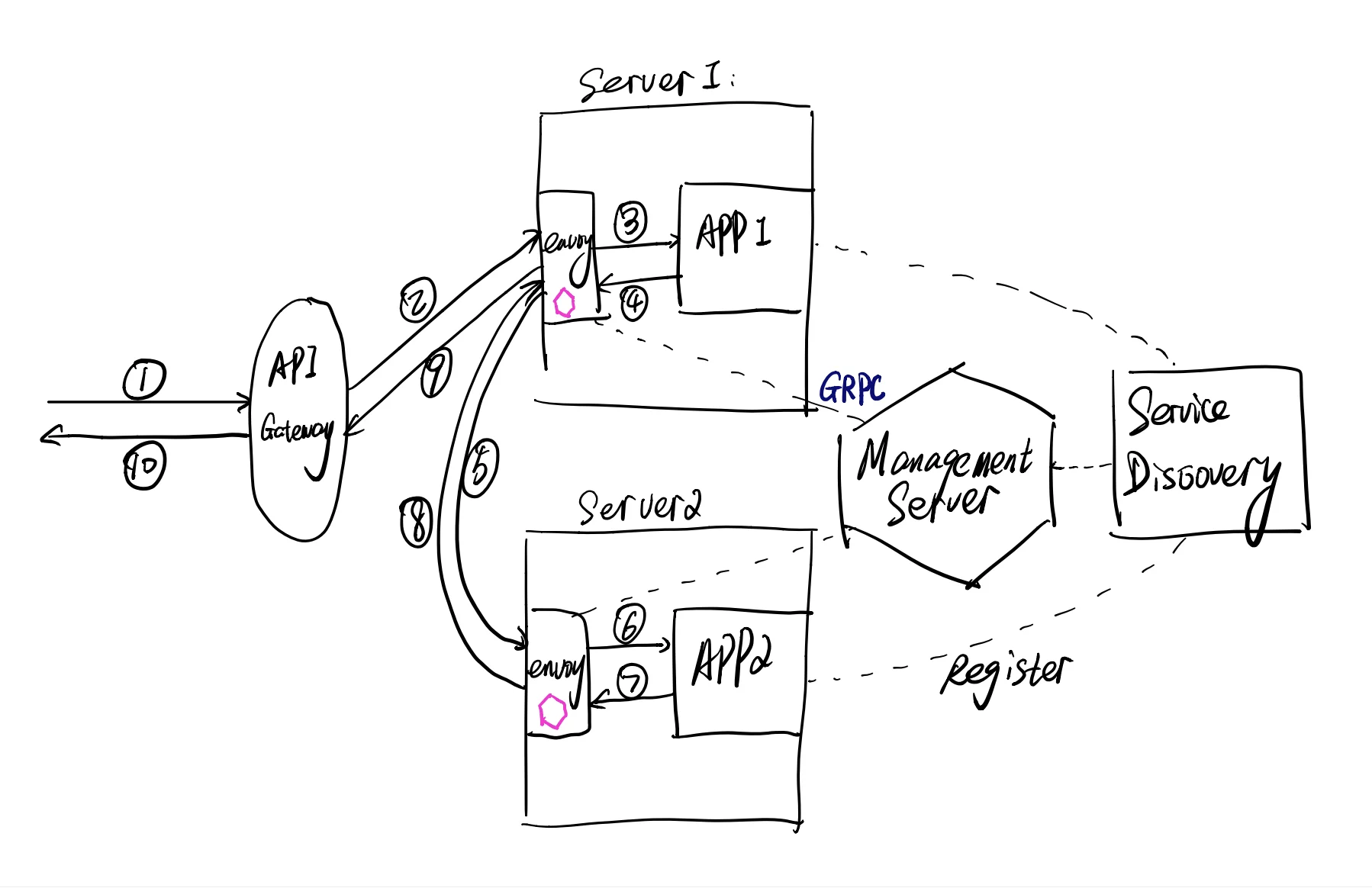

As pictured, an Envoy instance is deployed beside every application. A proxy deployed this way is called a sidecar.

Let’s analyze how the user traffic moves.

1. The user hits the website and the browser queries an API. The API gateway receives the user request.

2. The API gateway forwards the request to backend server1 (in Kubernetes, it can be a Pod).

3. The Envoy on the backend server receives this HTTP request, resolves its destination, and forwards it to the local port that APP1 listens on.

4. APP1 receives the request, processes the business logic, and calls an RPC service it depends on in APP2. The request is first sent to the local Envoy.

5. The local Envoy resolves the IP address and port of the RPC service APP2 according to the management server and sends the RPC request to the APP2 server.

6. The server where the RPC service is located receives the request. To be clear, it’s the Envoy on that server that receives the request and then applies the same logic as in step 3.

7. APP2 processes the request and returns the response.

8. The Envoy forwards the response to server1.

9. Two forwards are omitted here: envoy(1) to APP1, and APP1 to envoy(1). Then envoy(1) returns the response to the API gateway.

10. The API gateway returns the response to the user.
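The hops above can be written down as a small sketch. This is only an illustration of the ordering described in the list, not anything Envoy itself runs; the function name `trace_request` and the hop labels are made up for this example.

```python
def trace_request():
    """Return the ordered hops of one user request and its response."""
    request_path = [
        "user",
        "api-gateway",
        "envoy(1)",  # inbound: sidecar on server1 receives the HTTP request
        "APP1",
        "envoy(1)",  # outbound: APP1 calls APP2 through its local sidecar
        "envoy(2)",  # inbound: sidecar on the APP2 server
        "APP2",
    ]
    # The response retraces the same hops in reverse order,
    # starting from the hop just before APP2.
    response_path = list(reversed(request_path))[1:]
    return request_path + response_path


if __name__ == "__main__":
    print(" -> ".join(trace_request()))
```

Note that `envoy(1)` shows up four times in the trace: twice on the way in and twice on the way back, which includes the two forwards that the list leaves out.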

The management server is responsible for telling Envoy how to process requests and where to forward them.

Service discovery is where applications register themselves.

Ingress and Egress

As you can see, there are two kinds of traffic within a server: ingress and egress.

- Any traffic sent to the server is ingress.

- Any traffic sent from the server is egress.

How to implement this transparently?

Set up iptables to redirect any traffic sent to this server to the Envoy service first; Envoy then forwards the traffic to the real application on this server.

Set up iptables to redirect any traffic sent from this server to the Envoy service first; Envoy then resolves the destination service using service discovery and forwards the request to the destination server.

By intercepting inbound and outbound traffic, Envoy can implement service discovery, circuit breaking, rate limiting, and monitoring transparently. Developers don’t need to care about the details or integrate any libraries.
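To make the interception idea concrete, here is a minimal model of the decision that the redirect rules encode. It is not iptables syntax and does not touch the network; the address `10.0.0.1` and the ports `15006` and `15001` are assumptions chosen for illustration, as are the names `Packet`, `classify` and `redirect`.

```python
from dataclasses import dataclass

LOCAL_IP = "10.0.0.1"        # assumed address of this server
ENVOY_INBOUND_PORT = 15006   # assumed port where Envoy accepts redirected ingress
ENVOY_OUTBOUND_PORT = 15001  # assumed port where Envoy accepts redirected egress


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int


def classify(packet):
    """Label a packet as ingress, egress, or unrelated to this server."""
    if packet.dst_ip == LOCAL_IP:
        return "ingress"
    if packet.src_ip == LOCAL_IP:
        return "egress"
    return "other"


def redirect(packet):
    """Return the (ip, port) that receives the packet after interception."""
    direction = classify(packet)
    if direction == "ingress":
        return (LOCAL_IP, ENVOY_INBOUND_PORT)
    if direction == "egress":
        return (LOCAL_IP, ENVOY_OUTBOUND_PORT)
    # Traffic that does not involve this server is left untouched.
    return (packet.dst_ip, packet.dst_port)
```

In both directions the application keeps using the original destination address, and only the delivery point changes. A real setup also has to exempt Envoy's own connections from these rules, otherwise the traffic it forwards would be redirected back to itself.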

Anatomy of Envoy proxy configuration

The first important role of Envoy in the service mesh is that of a proxy: it receives requests and forwards them.

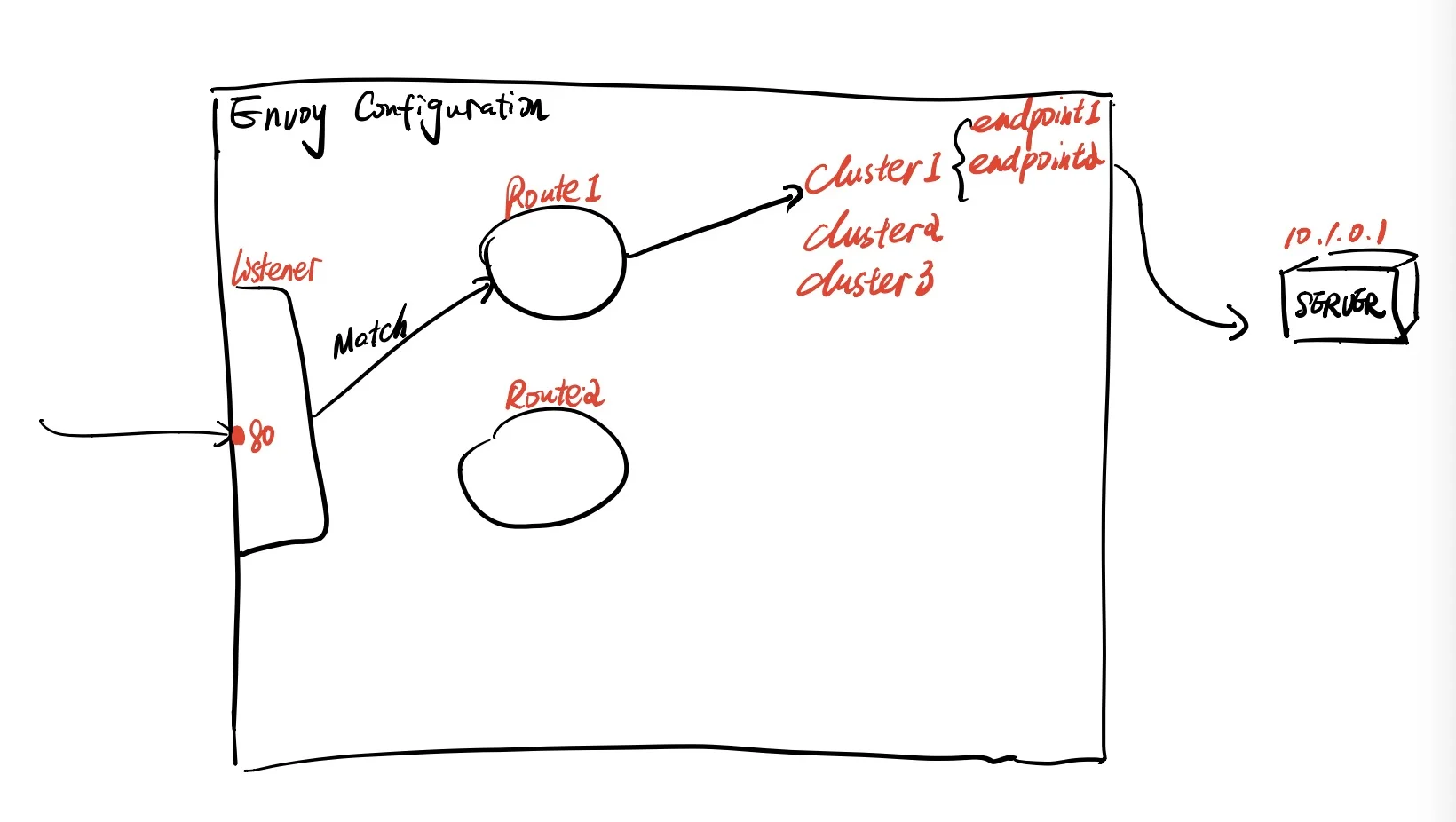

To see the components that make the proxy work, we can start with a request flow.

- A request reaches a port on the server that Envoy listens on. This part is called the listener.

- Envoy receives the request and processes it according to a rule. The rule is called a route.

- The route examines the request’s metadata and sends the request to a specific group of backend servers. This group is called a cluster.

- Each concrete server IP:port behind a cluster is called an endpoint.

These are the main Envoy components in most proxy use cases. As with Nginx, you can set up the whole configuration with static files.

Here is an example with static configuration; you can see the full file here.

static_resources:

Don’t be nervous, you can understand this configuration easily by reading it in order:

- listener

  It says that Envoy listens at `0.0.0.0:80`.

- route

  It tells Envoy how to process a request. There is a route named `local_route` with a rule that matches the wildcard domain. It forwards the request to the `service1` cluster if the request path’s prefix matches `/service/1`, or to the `service2` cluster if the prefix matches `/service/2`.

- cluster

  Finally, the `service1` cluster mentioned before is resolved to its endpoints, which are the real addresses of the servers. Here the address is `service1:80`.
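The listener, route and cluster chain described above can be modeled in a few lines. This is a rough sketch of the lookup logic, not Envoy's implementation: the names `ROUTES`, `CLUSTERS`, `pick_cluster` and `resolve` are hypothetical, and the `service2:80` endpoint is an assumption, since only the `service1` address is stated above.

```python
LISTENER_ADDRESS = ("0.0.0.0", 80)  # where the proxy accepts requests

# Ordered (path prefix, cluster name) pairs; the first match wins.
ROUTES = [
    ("/service/1", "service1"),
    ("/service/2", "service2"),
]

# Cluster name -> list of (host, port) endpoints.
CLUSTERS = {
    "service1": [("service1", 80)],
    "service2": [("service2", 80)],  # assumed, not stated in the text
}


def pick_cluster(path):
    """Return the cluster for the first route whose prefix matches the path."""
    for prefix, cluster in ROUTES:
        if path.startswith(prefix):
            return cluster
    return None


def resolve(path):
    """Return the (host, port) endpoint a request path is forwarded to."""
    cluster = pick_cluster(path)
    if cluster is None:
        return None  # no route matched
    return CLUSTERS[cluster][0]  # the sketch simply takes the first endpoint


if __name__ == "__main__":
    print(resolve("/service/1/users"))
    print(resolve("/unknown"))
```

Because this is plain prefix matching, a path such as `/service/10` would also go to `service1`. Load balancing across several endpoints is left out; the sketch always picks the first one.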

So the basic structure of the configuration is quite straightforward and easy to understand. If you want to change the configuration and don’t know where to start, you can refer to this sample; every data model in it can be found in the Envoy documentation.

Most importantly, Envoy also supports dynamic configuration, which is what SOA and microservice deployments mostly use. It fits situations where a service’s endpoints and route rules may change at any time.

To use dynamic resources, Envoy lets you point it at an API server, and it divides the above components into different APIs, or into different resources within one API.

- LDS: listener

  The listener discovery service (LDS) is an optional API that Envoy will call to dynamically fetch listeners. Envoy will reconcile the API response and add, modify, or remove known listeners depending on what is required.

- RDS: route

  The route discovery service (RDS) API is an optional API that Envoy will call to dynamically fetch route configurations. A route configuration includes HTTP header modifications, virtual hosts, and the individual route entries contained within each virtual host.

- CDS: cluster

  The cluster discovery service (CDS) is an optional API that Envoy will call to dynamically fetch cluster manager members. Envoy will reconcile the API response and add, modify, or remove known clusters depending on what is required.

- EDS: endpoint

  The endpoint discovery service (EDS) is an xDS API, served over gRPC or REST-JSON, that Envoy uses to fetch cluster members. The cluster members are called “endpoints” in Envoy terminology. For each cluster, Envoy fetches the endpoints from the discovery service.
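The "add, modify, or remove" reconciliation mentioned for LDS and CDS can be illustrated with a small diff over named resources. This is a simplified sketch under the assumption that resources are plain dictionaries keyed by name; `reconcile` is a hypothetical helper, not an Envoy API.

```python
def reconcile(known, desired):
    """Compare two name -> config mappings.

    Returns three sorted lists of resource names:
    (added, modified, removed).
    """
    added = sorted(name for name in desired if name not in known)
    removed = sorted(name for name in known if name not in desired)
    modified = sorted(
        name
        for name in desired
        if name in known and known[name] != desired[name]
    )
    return added, modified, removed


if __name__ == "__main__":
    known = {"service1": {"port": 80}, "service2": {"port": 80}}
    desired = {"service1": {"port": 8080}, "service3": {"port": 80}}
    print(reconcile(known, desired))
    # (['service3'], ['service1'], ['service2'])
```

The same comparison applies whether the resources are listeners or clusters; only the content of each config entry differs.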

The concepts are the same as in the static configuration. You can initialize the configuration with:

admin:

The only static resource is `xds_cluster`, the management server cluster, which provides a gRPC streaming API that answers the LDS, CDS, EDS, and RDS requests.

As you may notice, there are only `lds_config` and `cds_config` inside the config file. That’s because the RDS source is specified inside the listeners returned by LDS, and the EDS source is specified inside the clusters returned by CDS.

In a service mesh architecture, the management server is the most important module. It usually connects to a distributed service discovery system, which can be Etcd, Zookeeper, Consul, Eureka, or Kubernetes (Kubernetes itself uses Etcd as its backing store), and it provides interfaces for changing the configuration so that updates take effect in near real time.
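As a rough picture of what such a management server does, the sketch below keeps a versioned view of cluster endpoints and pushes every change to its subscribers. It is an in-memory toy, assuming a registry that reports changes through a callback; the class `ManagementServer` and its methods are hypothetical and do not correspond to the real xDS protocol or to any existing control plane.

```python
class ManagementServer:
    """Toy control plane: holds endpoints per cluster and notifies proxies."""

    def __init__(self):
        self.version = 0
        self.endpoints = {}    # cluster name -> list of "ip:port" strings
        self.subscribers = []  # callbacks standing in for connected proxies

    def subscribe(self, callback):
        """Register a proxy and send it the current state immediately."""
        self.subscribers.append(callback)
        callback(self.version, dict(self.endpoints))

    def on_registry_change(self, cluster, addresses):
        """Called when the service discovery system reports new endpoints."""
        self.endpoints[cluster] = list(addresses)
        self.version += 1
        for callback in self.subscribers:
            callback(self.version, dict(self.endpoints))


if __name__ == "__main__":
    received = []
    server = ManagementServer()
    server.subscribe(lambda version, state: received.append((version, state)))
    server.on_registry_change("app2", ["10.0.0.2:9090"])
    print(received[-1])
    # (1, {'app2': ['10.0.0.2:9090']})
```

Pushing on every change is what makes the update near real time: a proxy does not need to be restarted or to re-read a file to learn about a new endpoint.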

Conclusion

Understanding how user traffic flows made it easier for me to understand the component design of Envoy and get a first look at its configuration. From there, you can move on to more features.